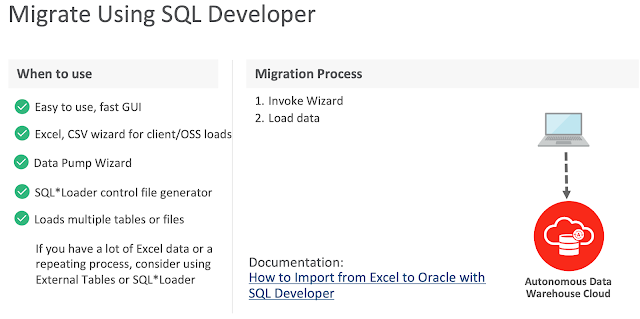

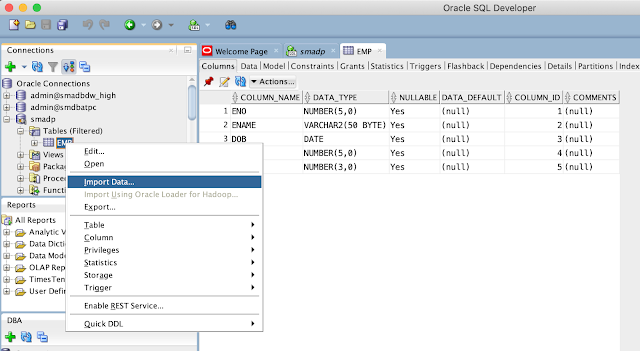

The picture below shows the tools Oracle supports for migrating an on-premises Oracle database to Autonomous Database (ADB):

Cloud migration path

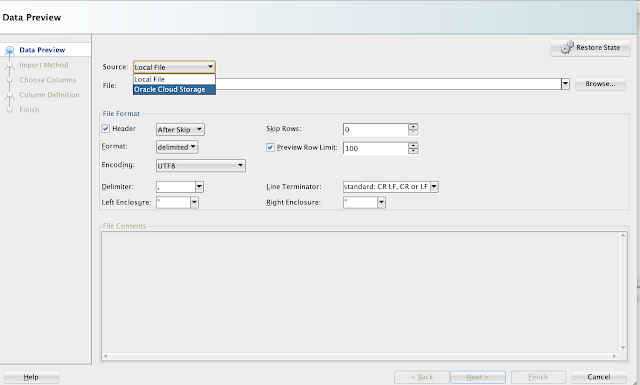

To migrate the data, the dump files must first be placed in cloud object storage: Oracle Cloud Object Storage, Microsoft Azure Blob Storage, or Amazon S3.

Migrating with Oracle Data Pump

Oracle Data Pump offers flexible and fast data movement between Oracle databases. Data Pump can import directly from dump files residing in cloud storage. Use the following example to export the on-premises database:

    expdp sh/welcome123@mydb \
      exclude=index,cluster,indextype,materialized_view,materialized_view_log,materialized_zonemap,db_link \
      data_options=group_partition_table_data \
      parallel=16 \
      schemas=sh \
      dumpfile=exp_MYDB_%u.dmp \
      encryption_pwd_prompt=yes

Below are some guidelines and recommended parameters to use with expdp:

- Use a schema-level export for ADB migration

- Use parallelism to speed up the export job

- Use the exclude parameter to leave out object types that are not supported in ADB

- With data_options=group_partition_table_data, Data Pump unloads all partitions of a table in one operation, so the data can be loaded into a non-partitioned table on import, as recommended for ADB
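The guidelines above can also be captured in a Data Pump parameter file, which avoids escaping the long exclude list on the shell command line. The following is a minimal sketch rather than a definitive setup: the file name exp_sh.par is hypothetical, the schema and dump file names are taken from the export example, and the command is only printed so the file can be reviewed first.

```shell
#!/usr/bin/env bash
# Sketch: write the recommended export settings to a parameter file.
# PARFILE is a hypothetical name chosen for illustration.
PARFILE="${PARFILE:-exp_sh.par}"

cat > "$PARFILE" <<'EOF'
schemas=sh
parallel=16
exclude=index,cluster,indextype,materialized_view,materialized_view_log,materialized_zonemap,db_link
data_options=group_partition_table_data
dumpfile=exp_MYDB_%u.dmp
EOF

# Print the command instead of running it, so the settings can be checked first.
# The password is left out of the connect string; expdp prompts for it.
echo "expdp sh@mydb parfile=$PARFILE encryption_pwd_prompt=yes"
```

Keeping the password out of both the parameter file and the command line means it does not end up in shell history or in a file on disk.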

Once the database is exported, move the dump files to cloud storage. In this blog, we assume you are using Oracle Cloud Object Storage to hold your dump files.

If it is not already installed, download the latest Oracle client software to your desktop; it also includes the latest Data Pump utilities. Before you start importing the database into ADB, ensure the following are in place:

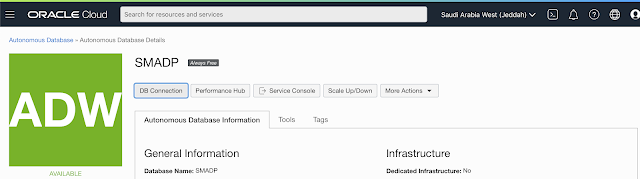

- An ADB database is configured, up and running

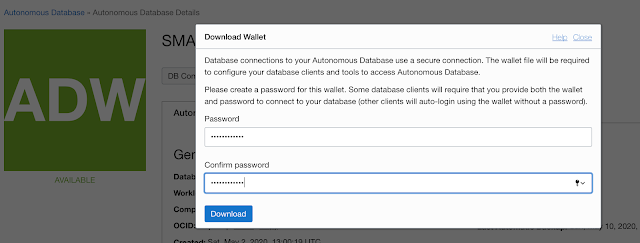

- You have downloaded the ADB connection credentials from the ADB's page in the OCI console

- Export files are moved to cloud storage

- Cloud storage credentials are created in ADB

If you are using Oracle Cloud Object Storage, create a bucket and upload all your dump files to it.
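As a sketch of the upload step with the OCI command-line interface (an assumption; the post does not say which tool is used), the function below handles one `oci os object put` call per dump file. The directory, namespace, and bucket are placeholders, and DRY_RUN is a hypothetical switch introduced here so that, by default, the commands are only printed for review.

```shell
#!/usr/bin/env bash
# Sketch: upload every dump file in a directory to an Object Storage bucket.
# Assumes the OCI CLI is installed and configured; all names are placeholders.

upload_dumps() {
  local dir="$1" namespace="$2" bucket="$3"
  local f
  for f in "$dir"/exp_MYDB_*.dmp; do
    [ -e "$f" ] || continue   # skip when the pattern matches nothing
    if [ "${DRY_RUN:-1}" = "1" ]; then
      # Default: only show what would be run.
      echo "oci os object put --namespace $namespace --bucket-name $bucket --file $f --name $(basename "$f")"
    else
      oci os object put --namespace "$namespace" --bucket-name "$bucket" \
        --file "$f" --name "$(basename "$f")"
    fi
  done
}

# Example call (prints the commands; set DRY_RUN=0 to actually upload):
# upload_dumps /u01/exports namespace-string bucketname
```

Using the file's base name as the object name keeps the names in the bucket identical to the ones Data Pump wrote, which is what the import's dump file URL has to match.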

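Create the cloud storage credentials in ADB as in the example below. For Oracle Cloud Object Storage, the username is your OCI user name and the password is an auth token generated for that user, not the console sign-in password: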

    BEGIN
      DBMS_CLOUD.CREATE_CREDENTIAL(
        credential_name => 'DEF_CRED_NAME',
        username        => 'adwc_user@example.com',
        password        => 'Welcome12$'
      );
    END;
    /

Once the credentials are created in ADB, run the impdp command from your desktop as in the example below:

    impdp admin/password@ADWC1_high \
      directory=data_pump_dir \
      credential=def_cred_name \
      dumpfile=https://objectstorage.us-ashburn-1.oraclecloud.com/n/namespace-string/b/bucketname/o/exp_MYDB_%u.dmp \
      parallel=16 \
      encryption_pwd_prompt=yes \
      partition_options=merge \
      transform=segment_attributes:n \
      transform=dwcs_cvt_iots:y transform=constraint_use_default_index:y \
      exclude=index,cluster,indextype,materialized_view,materialized_view_log,materialized_zonemap,db_link

If you have worked with ADB, you know that admin is the default administrator user. To import as a different user, first create that user in ADB and grant it the privileges required for import. Choose the TNS service name (ADWC1_high in this example) from the credentials file you downloaded. The dumpfile parameter must be set to the object URL, which points to the dump files in your bucket.
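The object URL follows the pattern visible in the impdp example: region, namespace, bucket, and object name. A small helper such as the one below (object_url is a hypothetical name, and the arguments are the placeholders from this post) can assemble it; treat it as a sketch under those assumptions.

```shell
#!/usr/bin/env bash
# Sketch: assemble the Object Storage URL used in the dumpfile parameter.
# Arguments: region, namespace, bucket, object name (all placeholders).
object_url() {
  printf 'https://objectstorage.%s.oraclecloud.com/n/%s/b/%s/o/%s\n' "$1" "$2" "$3" "$4"
}

# The %u wildcard is passed through unchanged for Data Pump to expand.
object_url us-ashburn-1 namespace-string bucketname 'exp_MYDB_%u.dmp'
```

The object name here is the dump file name from the export example; it has to match the names of the files actually uploaded to the bucket.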

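Note that the impdp command does not specify a log file, so Data Pump writes the log as import.log in the directory named by the directory parameter (data_pump_dir here). Because that directory lives inside ADB, copy the log to your object storage to review it, using the syntax below: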

    BEGIN
      DBMS_CLOUD.PUT_OBJECT(
        credential_name => 'DEF_CRED_NAME',
        object_uri      => 'https://objectstorage.us-ashburn-1.oraclecloud.com/n/namespace-string/b/bucketname/o/import.log',
        directory_name  => 'DATA_PUMP_DIR',
        file_name       => 'import.log');
    END;
    /

Conclusion

In a nutshell, we looked at the tools Oracle supports for migrating an on-premises database to ADB and walked through such a migration with Data Pump, following best practices for a fast migration. In subsequent blog posts, we will cover MV2ADB and other tools, along with the procedures for migrating to ADB with them.

References:

Refer to the notes and links below to learn more about migrating an on-premises database to Oracle ADB.

Oracle Autonomous Database Schema Advisor (Doc ID 2462677.1)

https://docs.oracle.com/en/cloud/paas/autonomous-data-warehouse-cloud/user/load-data-data-pump.html#GUID-30DB1EEA-DB45-49EA-9E97-DF49A9968E24

https://www.oracle.com/middleeast/database/technologies/cloud-migration.html

https://www.oracle.com/webfolder/s/assets/webtool/cloud-migration-advisor/index.html

Note: Pictures and diagrams are copied from various Oracle presentations for education and demo purposes only.